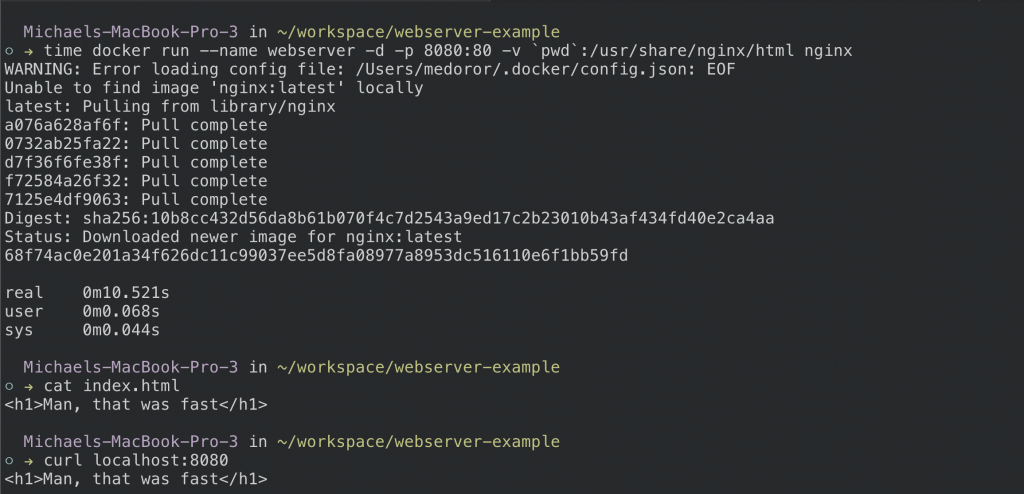

Need to serve files on localhost? Do it in 10 seconds using nginx, Docker, and the following one-liner:

```shell
docker run --name webserver -d -p 8080:80 -v /some/content/to/serve:/usr/share/nginx/html nginx
```

See your files in your browser by navigating to http://localhost:8080. By default, nginx serves the index.html file from the mounted directory, so add one for a homepage. For more information, see the documentation for the official nginx Docker image.
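As a minimal sketch of the "add one for a homepage" step, the script below creates a directory containing a simple index.html and then starts the container with the one-liner pointed at it. The directory (SITE_DIR, defaulting to $HOME/site) and the page contents are hypothetical placeholders, not anything nginx requires, and the docker command only runs if the docker CLI is on your PATH.

```shell
#!/bin/sh
# Hypothetical content directory; point SITE_DIR at whatever you want to serve.
# Keep it an absolute path, since -v expects an absolute host path.
SITE_DIR="${SITE_DIR:-$HOME/site}"

mkdir -p "$SITE_DIR"

# nginx returns index.html when you request "/"
cat > "$SITE_DIR/index.html" <<'EOF'
<!DOCTYPE html>
<html>
  <body><h1>Hello from nginx in Docker</h1></body>
</html>
EOF

# Only try to start the container if the docker CLI is installed
if command -v docker >/dev/null 2>&1; then
  docker run --name webserver -d -p 8080:80 -v "$SITE_DIR":/usr/share/nginx/html nginx \
    && echo "Open http://localhost:8080 in your browser"
fi
```

Any other files you drop into the same directory (CSS, images, more HTML pages) are served at matching paths under http://localhost:8080/.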

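--name – Names the container webserver, so you can refer to it in later commands such as docker stop webserver.

-d – Detached mode. Runs the container as a background process, so your terminal stays free.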

-v – Mount a volume. Maps a directory on your local machine to a path inside the container. The example above mounts /some/content/to/serve at /usr/share/nginx/html, the default directory nginx serves files from, so your index.html (and everything else in that directory) is served by nginx.

-p – Publish ports. Maps port 8080 on the host machine to port 80 inside the container, where nginx listens.
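To see what -p and -v actually did, you can ask Docker directly. This sketch assumes the container is named webserver, as in the one-liner; it prints the host address and port published for container port 80, lists the files nginx sees in its document root, and then removes the container so the name and port 8080 are free for the next run.

```shell
#!/bin/sh
# Only inspect if a container named "webserver" exists (from --name in the one-liner)
if docker container inspect webserver >/dev/null 2>&1; then
  # Host address/port published for container port 80, e.g. 0.0.0.0:8080
  docker port webserver 80
  # Files nginx sees in its document root; these are your mounted host files
  docker exec webserver ls /usr/share/nginx/html
  # Stop and delete the container so the name and host port can be reused
  docker rm -f webserver
else
  echo "No container named webserver found"
fi
```

If you only want to pause the server, docker stop webserver and docker start webserver keep the container around instead of deleting it.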

The result

Want to take it to the next level and serve these files over the web? Follow the steps here!